Anil Dash argued that today’s AI is unreasonable:

Amongst engineers, coders, technical architects, and product designers, one of the most important traits that a system can have is that one can reason about that system in a consistent and predictable way. Even “garbage in, garbage out” is an articulation of this principle — a system should be predictable enough in its operation that we can then rely on it when building other systems upon it.

This core concept of a system being reason-able is pervasive in the intellectual architecture of true technologies. Postel’s Law (“Be liberal in what you accept, and conservative in what you send.”) depends on reasonable-ness. The famous IETF keywords list, which offers a specific technical definition for terms like “MUST”, “MUST NOT”, “SHOULD”, and “SHOULD NOT”, assumes that a system will behave in a reasonable and predictable way, and the entire internet runs on specifications that sit on top of that assumption.

The very act of debugging assumes that a system is meant to work in a particular way, with repeatable outputs, and that deviations from those expectations are the manifestation of that bug, which is why being able to reproduce a bug is the very first step to debugging.

Into that world, let’s introduce bullshit. Today’s highly-hyped generative AI systems (most famously OpenAI) are designed to generate bullshit by design.

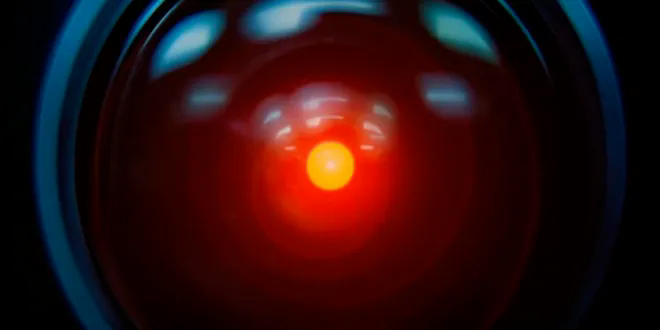

I would be very slow and careful in deciding to run AI systems in mission-critical applications. We all know how 2001: A Space Odyssey ends.